On June 4, followers of the Houston Museum of Natural Science (HMNS) witnessed something that upset conventional wisdom about social media security. The museum’s verified Instagram account—complete with its checkmark and institutional credibility—was hijacked and used to promote a fake Bitcoin giveaway featuring deepfake videos of Elon Musk promising $25,000 in BTC.

Within hours, the museum regained control and deleted the posts. But the episode exposed a harsh reality: everything we’ve been told about protecting ourselves from crypto scams is quickly becoming outdated.

The Uncomfortable Truth About “Best Practices”

For years, security experts have preached the same mantras: “Only follow verified accounts,” “Trust established institutions,” and “Be skeptical of get-rich-quick schemes.” And it’s sound advice for things like the cloud mining and dating scams we’re all familiar with. Today, however, the HMNS incident proves these guidelines are not just insufficient—they’re actually creating a false sense of security that scammers are exploiting with surgical precision.

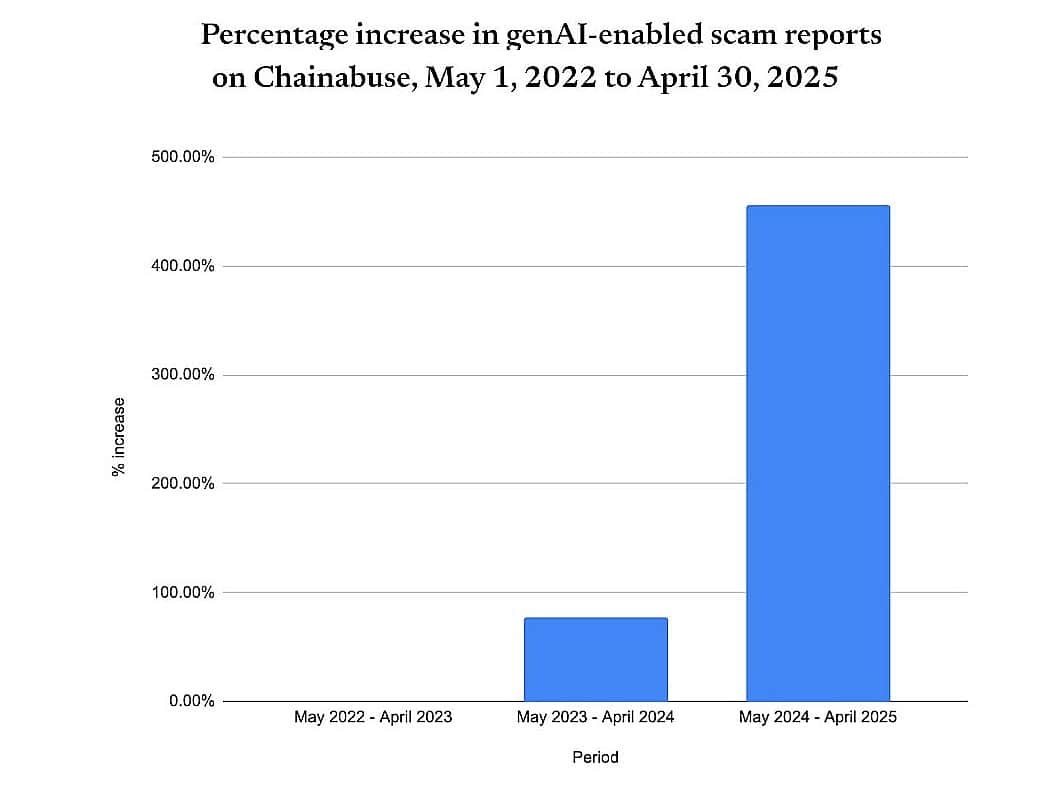

According to Chainabuse, reports of scams using generative artificial intelligence rose by 456% between May 2024 and April 2025 compared with the previous twelve months, and the year before had already seen a 78% spike. Compounded, that is roughly a tenfold increase over two years (1.78 × 5.56 ≈ 9.9). This isn’t just growth; it’s an acceleration that traditional security advice never anticipated.

Source: TRM Labs

The problem isn’t that people are ignoring security advice. The problem is that the advice itself was built for a world that no longer exists.

Why This Problem Is Worse Than Social Platforms Admit

Social media platforms have a vested interest in downplaying the severity of AI-powered scams. Every admission of vulnerability threatens user confidence and, more importantly, advertiser confidence. But the numbers tell a different story than platform PR departments want you to hear.

Recent attacks reveal a sophistication and scope that platforms won’t acknowledge. In January 2025, both NBA and NASCAR league accounts were compromised simultaneously, pushing fake “$NBA coin” and “$NASCAR coin” promotions. This wasn’t random; it was coordinated. The same month, the UFC’s official Instagram account fell victim to a fraudulent crypto campaign tagged “#UnleashTheFight.”

What platforms won’t tell you is that these attacks succeeded not despite their security measures, but because their security measures are fundamentally mismatched against AI-powered threats. Traditional account security was designed to stop human hackers using human methods. AI-powered attacks operate at machine speed with machine precision, exploiting vulnerabilities faster than human-designed systems can respond. Meta, X, and other platforms remain deliberately reactive because proactive measures would require admitting the true scale of the problem—and the substantial infrastructure investment needed to address it.

Here’s what security experts won’t tell you: social media platforms have economic incentives that directly conflict with scam prevention. Engagement-driven algorithms amplify controversial content, including scam content that generates comments, shares, and reactions. The same systems that make platforms profitable make them vulnerable to exploitation. Effective scam prevention would require platforms to sacrifice some engagement for security—a trade-off that shareholders won’t accept without regulatory pressure.

The Real Reason Museums and Sports Teams Are Prime Targets (It’s Not What You Think)

Conventional wisdom suggests hackers target these accounts for their follower counts and credibility. But this misses the deeper, more troubling reason: these institutions represent human curation at its most trusted level.

When a crypto promotion appears on a museum’s account, followers don’t just see an endorsement—they see what appears to be institutional vetting. Museums, sports teams, and cultural institutions occupy a unique psychological space where audiences reasonably assume content has been reviewed by responsible human gatekeepers.

This isn’t about reaching large audiences. It’s about exploiting trust architectures that took decades to build. A crypto scam on a random influencer’s account faces natural skepticism. The same scam on the Vancouver Canucks’ account (hijacked on May 5th) or a NASCAR account bypasses that skepticism entirely. The selection of these targets reveals sophisticated psychological profiling that traditional security frameworks never considered. Scammers aren’t just stealing accounts—they’re hijacking decades of institutional trust-building.

Why Current Solutions Won’t Work

The crypto industry’s response to AI-powered scams follows a predictable pattern: more education, better verification, stronger passwords. This approach fundamentally misunderstands the problem.

Current solutions assume the vulnerability lies with users who need better training or with platforms that need better detection. Recent hacks at Coinbase, BitoPro and Cetus were each followed by the same tired formula: press releases promising “enhanced” security precautions going forward. That response has always been too little, too late, and the criminal toolkit has since expanded dramatically. AI-powered scams exploit something deeper: the cognitive shortcuts that make digital communication possible in the first place.

When you see content from a verified account you trust, your brain engages in what psychologists call “cognitive offloading”—delegating verification to the platform and institution. This isn’t a bug in human cognition; it’s a feature that allows us to process thousands of digital interactions daily without exhausting our mental resources.

Traditional security advice asks people to disable this cognitive architecture entirely and treat every interaction with maximum skepticism. This isn’t just impractical; it’s psychologically impossible at scale. For now, though, it really is all we’ve got.

The Authentication Theater Problem

Two-factor authentication, account verification, and platform badges represent what security researchers increasingly call “authentication theater”—visible security measures that provide psychological comfort while offering minimal protection against sophisticated attacks.

The HMNS incident demonstrated how verification badges can actually increase vulnerability. They didn’t just fail to prevent the attack; they amplified its effectiveness by lending institutional credibility to fraudulent content. This creates a paradox that traditional security advice can’t resolve: the same mechanisms designed to establish trust become weapons for exploiting it.

What Traditional Advice Gets Wrong About AI

Standard crypto security advice treats AI as simply a more sophisticated version of existing threats. This fundamentally misjudges the qualitative shift AI represents.

Previous crypto scams required human creativity, manual social engineering, and time-intensive content creation. AI-powered scams operate continuously, testing thousands of variations simultaneously, learning from each interaction, and evolving in real time. When traditional advice suggests “being skeptical of too-good-to-be-true offers,” it assumes static scam tactics. But AI-powered scams dynamically adjust their promises, timing, and presentation based on target response patterns. The “too-good-to-be-true” threshold becomes a moving target that adapts faster than human awareness can follow.

The Deepfake Dilemma

Deepfake technology has reached a sophistication level where traditional “red flags” no longer apply. The Elon Musk deepfakes used in recent scams aren’t just convincing—they’re indistinguishable from authentic content to most viewers. The crypto industry’s response—asking users to become amateur forensic analysts—shifts responsibility in a way that’s both unfair and ineffective.

Conclusion

The failure of traditional crypto security advice isn’t accidental—it’s structural. These approaches emerged when crypto scams were human-scale problems requiring human-scale solutions. AI-powered scams represent a category change that requires entirely new frameworks.

Effective protection against AI-powered crypto scams requires acknowledging uncomfortable truths about the limitations of individual vigilance, the inadequacy of current platform security, and the need for systemic rather than behavioral solutions.

The Houston Museum of Natural Science incident wasn’t just another data point in rising crypto scam statistics. It was a preview of a future where traditional security advice doesn’t just fail—it creates the very vulnerabilities it claims to prevent. Until we’re honest about these realities, we’ll continue fighting tomorrow’s threats with yesterday’s tools.
